My Journey Towards High Resiliency

What is high resiliency?

According to Oxford, resilience means "the capacity to recover quickly from difficulties; toughness."

If we apply this definition to IT and systems administration, it describes how well your environment fares against predictable or unpredictable events that have a negative effect. These can range anywhere from an update breaking services to a disaster hitting your primary location (e.g. fire, earthquake, or flood).

How is resiliency measured?

How resiliency should be measured seems fairly obvious: what happens when a critical service or infrastructure component goes down? What is more debated is how far you should go in chasing a resilient infrastructure. Availability is usually expressed in "nines" (99.9% uptime is three nines), so the question becomes: how many nines should you chase? Some stop at two or three nines, which allows anywhere from about 3.65 days down to about 8.77 hours (roughly one working day) of downtime per year. That may not sound like much, but a business can lose anywhere from tens of thousands to millions of dollars for every day its infrastructure is down. On the other end of the spectrum are those trying to achieve as many nines as possible, spending millions, if not billions, on their infrastructure. A truly highly resilient infrastructure not only has no single point of failure, from power feeds to servers, but also spans multiple geographic locations so that a natural disaster can't wipe out the entire business.

| Availability (nines) | Downtime (per year) |
|---|---|
| 99% (two nines) | 3.65 days |
| 99.9% (three nines) | 8.77 hours |
| 99.99% (four nines) | 52.60 minutes |
| 99.999% (five nines) | 5.26 minutes |
| 99.9999% (six nines) | 31.56 seconds |
| 99.99999% (seven nines) | 3.16 seconds |
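The figures in the table follow from simple arithmetic: with n nines, the allowed downtime is 10^-n of a year. Here is a minimal Python sketch of that calculation, assuming an average year of 365.25 days (which is what the table's numbers work out to). The function names are hypothetical:

```python
# Seconds in an average year (365.25 days), matching the downtime table.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def allowed_downtime_seconds(nines: int) -> float:
    """Maximum downtime per year for an availability of `nines` nines.

    Two nines = 99%, three nines = 99.9%, and so on, so the allowed
    unavailability is 10 ** -nines of the year.
    """
    if nines < 1:
        raise ValueError("nines must be at least 1")
    return SECONDS_PER_YEAR * 10 ** -nines


def humanize(seconds: float) -> str:
    """Format a duration using the largest unit that keeps the value >= 1."""
    for unit, size in (("days", 86400), ("hours", 3600), ("minutes", 60)):
        if seconds >= size:
            return f"{seconds / size:.2f} {unit}"
    return f"{seconds:.2f} seconds"


for n in range(2, 8):
    print(f"{n} nines: {humanize(allowed_downtime_seconds(n))}")
```

Running it prints the same six figures as the table, which makes it easy to see that each extra nine cuts the downtime budget by a factor of ten.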

Resiliency isn't all about hardware

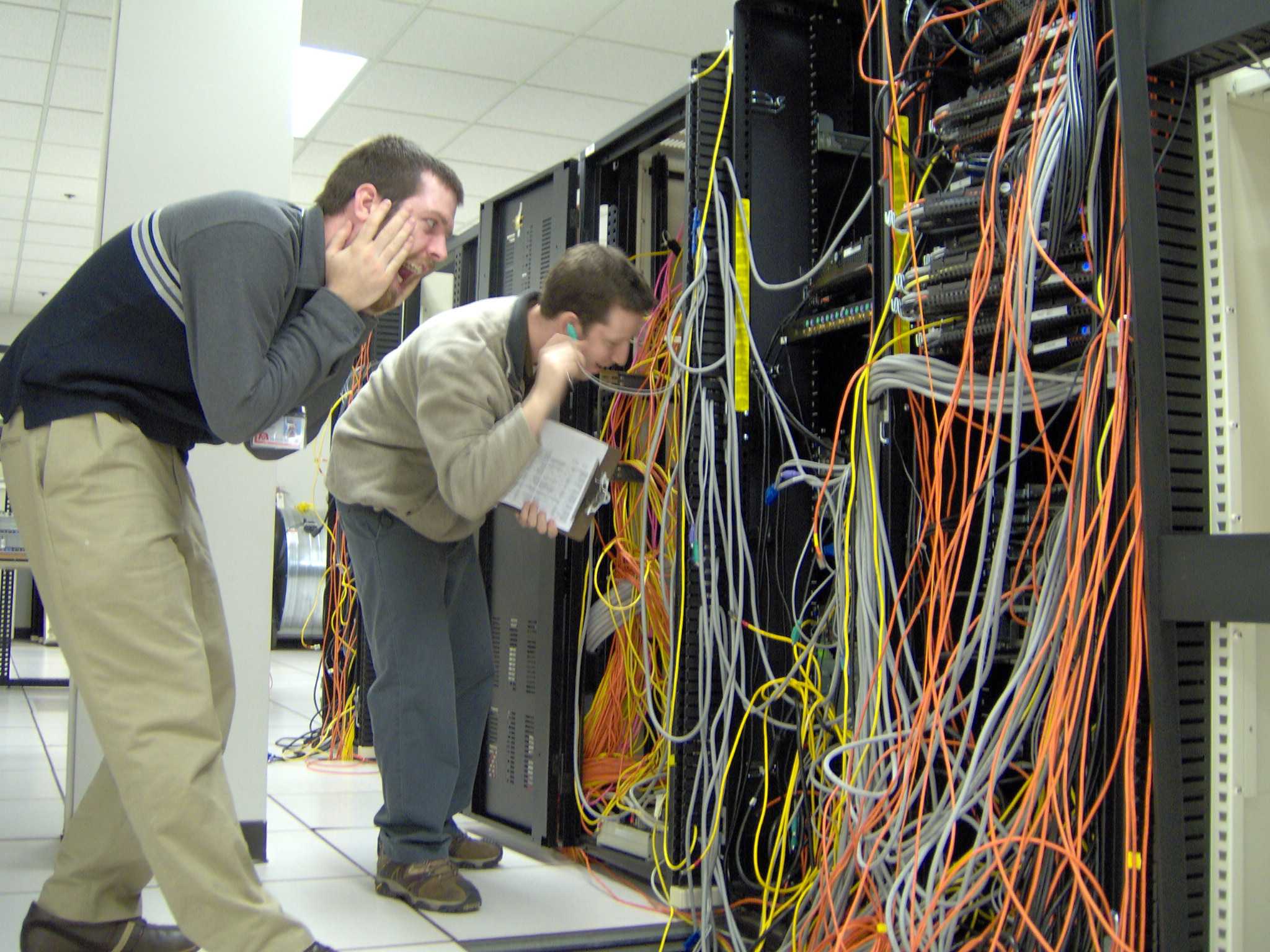

When resiliency is discussed, it's often associated with high availability, but there's more to it than how redundant your hardware is. You can build a network with no single point of failure that can handle anything thrown at it, and it still won't protect you from the weakest link: human error. Humans aren't perfect (where's the fun in that?), so how do you reduce human error?

First, make sure processes are in place. For example, suppose developers work directly on the main server and commit their changes afterwards; if someone forgets to commit, their work gets blown away by the next deploy. Instead, redesign the process so developers work locally and submit pull requests. In addition, make the remote folder writable only by the deploy user, so forgetful developers can't make accidental changes there.

Second, automate what you can. Computers excel at one thing, and that's doing things consistently. Even the most detail-oriented sysadmin or developer can make mistakes after running a process hundreds of times, but a computer will, for the most part, do exactly what it's told, every time.
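One way to keep the "only the deploy user can write here" rule from quietly eroding is to check it automatically. Below is a minimal Python sketch, assuming a Unix host where the deploy user's numeric UID is known. The function name is hypothetical. It walks the deploy directory and flags anything not owned by the deploy user, or writable by group or others:

```python
import os
import stat


def writable_by_others(root: str, deploy_uid: int) -> list[str]:
    """List paths under `root` that someone other than the deploy user could modify.

    A path is flagged if it is not owned by `deploy_uid`, or if it is
    group-writable or world-writable. Symlinks are inspected with lstat
    and skipped, since their permission bits are ignored on Linux.
    """
    flagged = []
    for dirpath, _dirnames, filenames in os.walk(root):
        # Check the directory itself plus every regular entry inside it.
        for path in [dirpath, *(os.path.join(dirpath, f) for f in filenames)]:
            st = os.lstat(path)
            if stat.S_ISLNK(st.st_mode):
                continue
            not_owner = st.st_uid != deploy_uid
            shared_write = st.st_mode & (stat.S_IWGRP | stat.S_IWOTH)
            if not_owner or shared_write:
                flagged.append(path)
    return flagged
```

For example, `writable_by_others("/var/www/site", pwd.getpwnam("deploy").pw_uid)` (the path and the `deploy` user name are placeholders) could run from cron or as a pipeline step, failing the job whenever the returned list is not empty.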

The third part, while not directly related to resilience, is how you respond to failure. Do you point fingers at developers for bad code, or do you just always blame DNS? When a failure happens, the discussion should be about how it happened and how it can be prevented in the future. The goal is that a junior sysadmin or developer should never be able to bring down your infrastructure by taking down a single system. That's not a knock on juniors; we've all had times where we accidentally used the wrong flag in a command and knocked a critical service offline.

The fourth and final part is testing your processes and infrastructure. I think Netflix has the right approach here with Chaos Monkey, a tool that creates chaos by randomly terminating instances, almost as if a monkey wandered in and started pulling random things. You don't have to build an internal tool or use Chaos Monkey, but you should test as frequently as possible. Regular testing gives you peace of mind if disaster does strike.
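To make the idea concrete, here is a toy Python simulation of the same principle. It is not how Netflix implements Chaos Monkey. It repeatedly terminates a random instance from a pool and checks whether the service still has enough healthy instances. The instance names and the `min_healthy` threshold are hypothetical:

```python
import random


def chaos_round(instances: set[str], min_healthy: int, rng: random.Random) -> tuple[str, bool]:
    """Terminate one random instance and report whether the service survives."""
    victim = rng.choice(sorted(instances))  # sorted so a seeded RNG is reproducible
    instances.discard(victim)
    return victim, len(instances) >= min_healthy


def failures_tolerated(pool_size: int, min_healthy: int, seed: int = 0) -> int:
    """Count how many random terminations the pool survives before the service breaks."""
    rng = random.Random(seed)
    instances = {f"web-{i}" for i in range(1, pool_size + 1)}
    tolerated = 0
    while instances:
        _victim, still_up = chaos_round(instances, min_healthy, rng)
        if not still_up:
            break
        tolerated += 1
    return tolerated
```

With three instances and a service that needs two of them, `failures_tolerated(3, 2)` returns 1: a single lost instance is fine, but a second one takes the service down. That is exactly the kind of thing you want to find out during a test rather than during a real outage.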

How I Plan on Implementing High Resiliency

After reading all this, you may be wondering how I plan to implement this myself. It comes in two distinct parts. The first is my homelab, which I've been working on making highly available (HA) for a year. I've added two more hypervisor machines alongside the one I already had, and I've built redundant network storage for the VMs. While I've gotten farther than some businesses, I still have plenty of work left before my homelab is truly highly resilient. The second part is making my website resilient. Though it's small for now, I plan to make it resilient enough to put many businesses' SLAs to shame. This includes building redundant cloud infrastructure as well as an automated process covering everything from instance configuration to deployment of the website.

This is the first part of my series on my journey to high resiliency. I plan on writing about every part of the process as I learn both the upsides and downsides of building a resilient infrastructure.
